AI Chip Market Size, Share & Growth Analysis: The Future of Intelligent Computing

The AI chip market is no longer a niche corner of the semiconductor industry; it has become one of the fastest-growing and most strategically important technology markets. From hyperscale datacenters training massive language models to tiny edge devices running on-device inference, a diverse set of silicon is being designed and deployed to meet different performance, power, latency and cost trade-offs: GPUs, CPUs, FPGAs, NPUs, TPUs, custom ASICs (Trainium, Inferentia, Athena-style ASICs), memory stacks (HBM, DDR) and networking adapters. This post walks through market size and share signals, breaks down segmentation by offering and by function (training vs inference), and highlights the trends shaping the next five years.

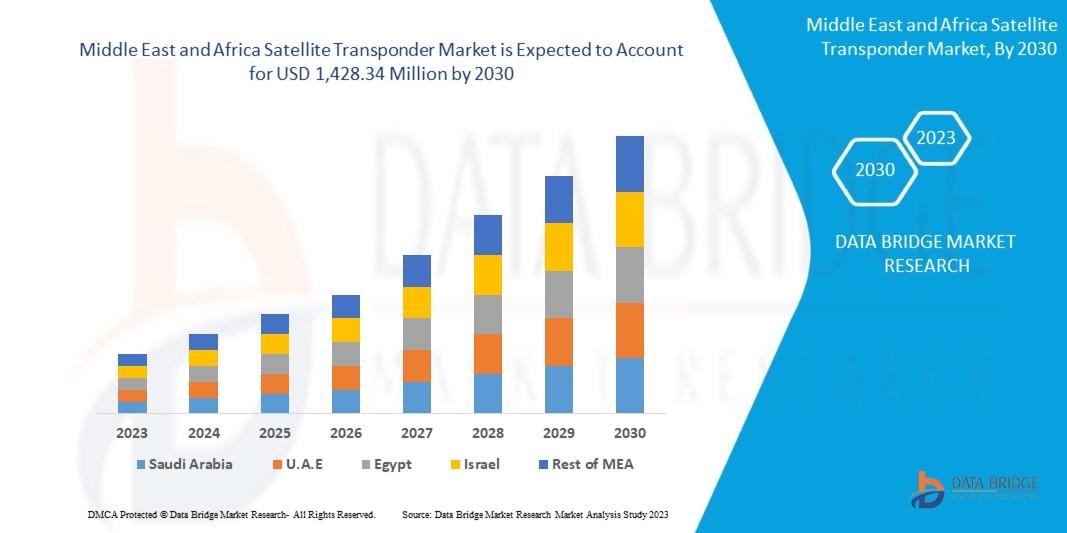

Market size and headline numbers

Independent market estimates vary by scope and definition (AI chipset, AI accelerators, AI hardware), but they tell a consistent story: a very large and rapidly expanding market. Recent market reports put the broader AI chip/hardware market in the hundreds of billions of US dollars over the next decade. For example, one industry report projects that the AI chip market will more than double, from roughly USD 123 billion in 2024 to about USD 311 billion by 2029.
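
As a quick sanity check on the report figures above, you can compute the implied compound annual growth rate (CAGR) directly. This minimal sketch plugs in the rounded USD 123 billion and USD 311 billion figures. The helper name `cagr` is our own and does not come from any report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Rounded figures quoted in the text (USD billions); 2024 -> 2029 spans 5 years.
start_2024 = 123.0
end_2029 = 311.0
rate = cagr(start_2024, end_2029, years=2029 - 2024)

print(f"Growth multiple: {end_2029 / start_2024:.2f}x")
print(f"Implied CAGR: {rate:.1%}")
```

With these inputs the growth multiple is about 2.53x, and the implied CAGR works out to roughly 20.4% per year.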

Why the market is growing so quickly

Three macro forces are driving adoption and spending on AI chips:

- Explosion of generative AI and large models. Training modern large language models (LLMs) and multimodal architectures requires massive compute, very high memory bandwidth and large on-package memory (HBM). Hyperscalers and AI labs are buying specialized training accelerators in huge quantities.

- Shift from cloud-only to hybrid deployments. Real-time GenAI services, edge inference for low-latency apps (AR/VR, autonomous machines), and on-device privacy demands mean inference compute is moving closer to users — creating demand for efficient inference accelerators (NPUs, optimized ASICs, edge GPUs).

- Verticalization and vendor divergence. Cloud providers and large enterprises are designing their own chips (AWS Trainium/Inferentia, Google TPUs, Apple/Meta custom silicon) to optimize cost and power per workload. This fragments the market but also expands total addressable spend. Even so, recent reports indicate that some customers still favor GPU ecosystems for their performance and maturity, which keeps competition dynamic.
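
The memory pressure described in the first bullet can be put into rough numbers with a back-of-the-envelope sketch of how much accelerator memory a model's state needs. The 70B-parameter model and the 80 GB of HBM per device are hypothetical values chosen for illustration. The figure of 16 bytes per parameter for training is a common rule of thumb for mixed-precision Adam: 16-bit weights and gradients, plus fp32 master weights and two fp32 optimizer moments. The sketch ignores activations, KV caches and framework overhead.

```python
import math

# Rule-of-thumb bytes per parameter (assumptions, not vendor figures).
BYTES_PER_PARAM = {
    "bf16_weights_only": 2,      # inference: weights stored in 16-bit
    "mixed_precision_adam": 16,  # training: 2 (weights) + 2 (grads) + 4 (fp32 master) + 4 + 4 (Adam moments)
}


def footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the model state, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_devices(total_gb: float, hbm_gb_per_device: float) -> int:
    """Lower bound on devices needed just to hold the state (no activations)."""
    return math.ceil(total_gb / hbm_gb_per_device)


params = 70e9  # hypothetical 70B-parameter model
hbm_gb = 80.0  # hypothetical HBM capacity per accelerator

for mode, bpp in BYTES_PER_PARAM.items():
    gb = footprint_gb(params, bpp)
    print(f"{mode}: {gb:,.0f} GB -> at least {min_devices(gb, hbm_gb)} devices")
```

Under these assumptions, the weights alone (140 GB) already exceed a single device. The training state (about 1.1 TB) needs at least 14 devices before any activation memory is counted. This is one reason HBM capacity and multi-chip scaling weigh so heavily in buying decisions.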

Download PDF brochure: https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=237558655&utm_source=linkedin.com&utm_medium=social&utm_campaign=sourabh

Segmentation by offering (what the market is buying)

AI chip offerings can be grouped into several functional families — each addresses distinct workload needs:

- GPUs (general-purpose GPUs): The dominant platform for both training and high-performance inference today. GPUs excel at large matrix operations and have a mature software stack (CUDA, cuDNN and surrounding ecosystem tools). They also continue to see architectural refinements such as HBM integration and sparsity support. Many hyperscalers base their large-scale training farms on GPUs.

- CPUs (x86, Arm): CPUs remain essential for orchestration, preprocessing and lightweight inference. Arm-based server CPUs are gaining traction in data centers as power-efficient inference nodes, and some forecasts expect Arm architectures to take a larger share of data center shipments as software adapts.

- FPGAs: Used where reconfigurability and low latency are important (telecom, specialized inference pipelines). FPGAs fill niche roles where customization beats fixed-function ASICs and where iteration speed matters.

- NPUs / Edge Accelerators: Purpose-built neural processing units are optimized for on-device inference (vision, voice, sensor fusion). They trade raw flexibility for power efficiency and are crucial for battery-powered devices.

- TPUs and Cloud ASICs (Trainium, Inferentia, Athena-style ASICs): Cloud-provider ASICs are engineered for scale, maximizing throughput per dollar within their providers' infrastructure. For workloads tuned to their instruction sets, they can undercut general-purpose solutions on cost per token or cost per training step.

- Other custom ASICs (e.g., Athena, MTIA, LPU): A growing ecosystem of in-house hyperscaler teams, startups and system vendors is designing specialized accelerators. These target model sparsity, mixed precision, low-latency inference and domain-specific tasks (speech, recommendation engines).

- Memory (HBM, DDR) and networking (NICs, interconnects): Memory bandwidth (HBM stacks) is a gating factor for model training performance. Interconnects and smart NICs (RDMA, GPUDirect-style fabrics) determine how efficiently clusters scale. These “supporting” components are critical revenue streams tied to AI chip deployments.
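
The roofline model is a standard way to bound attainable throughput, and it helps show why memory bandwidth can gate performance:

attainable FLOP/s = min(peak FLOP/s, memory bandwidth × arithmetic intensity)

The accelerator figures below (1 PFLOP/s peak, 3 TB/s of HBM bandwidth) are hypothetical. The byte counts assume each operand moves through memory exactly once, which real kernels only approximate.

```python
def roofline_flops(peak_flops: float, mem_bw: float, intensity: float) -> float:
    """Attainable FLOP/s = min(compute roof, memory bandwidth * arithmetic intensity)."""
    return min(peak_flops, mem_bw * intensity)


def matvec_intensity(n: int, bytes_per_elem: int = 2) -> float:
    """y = W @ x with W (n x n): 2n^2 FLOPs; reads W and x, writes y once."""
    flops = 2 * n * n
    bytes_moved = bytes_per_elem * (n * n + 2 * n)
    return flops / bytes_moved


def matmul_intensity(n: int, bytes_per_elem: int = 2) -> float:
    """C = A @ B with all matrices n x n: 2n^3 FLOPs; reads A and B, writes C once."""
    flops = 2 * n ** 3
    bytes_moved = bytes_per_elem * 3 * n * n
    return flops / bytes_moved


PEAK = 1.0e15  # hypothetical peak: 1 PFLOP/s of dense 16-bit math
BW = 3.0e12    # hypothetical HBM bandwidth: 3 TB/s

n = 8192
print(f"Ridge point: {PEAK / BW:.0f} FLOP/byte")
for name, ai in [("matrix-vector", matvec_intensity(n)), ("matrix-matrix", matmul_intensity(n))]:
    attainable = roofline_flops(PEAK, BW, ai)
    print(f"{name}: {ai:.1f} FLOP/byte -> {attainable / PEAK:.1%} of peak")
```

With these numbers, the ridge point is about 333 FLOPs per byte. A matrix-vector product (typical of small-batch LLM decoding) sits near 1 FLOP/byte and reaches well under 1% of peak. A large matrix-matrix product, by contrast, is compute-bound. Batching and higher HBM bandwidth both aim to push real workloads toward the compute roof.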

Function: training vs inference — different economics, different winners

The AI chip market divides broadly by function, although some chips (GPUs in particular) serve both:

Training

- Needs: maximum floating-point throughput, very high memory bandwidth, multi-chip scaling (NVLink, CXL, proprietary interconnects), and software stacks that support distributed training.

- Typical chips: high-end GPUs, datacenter ASICs (TPU v4/v5 class), large NMIs with HBM on-package.

- Economics: training is capital-intensive and dominated by hyperscalers, large cloud providers and specialized AI labs. The margins and scale advantages here are huge, which is why companies with deep pockets (and mature ecosystems) often dominate training markets. Current evidence suggests a strong lead for GPU ecosystems, although cloud ASICs are increasingly challenging that dominance for certain workloads.
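
The capital intensity of training follows from how much compute a single run consumes. A widely used approximation for dense transformer models is about 6 FLOPs per parameter per training token (C ≈ 6·N·D). The sketch below applies it with hypothetical inputs. The model size, token count, per-device peak, assumed 40% utilization and cluster size are all illustrative, and real runs lose further efficiency to communication and restarts.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb compute for dense transformer training: ~6 FLOPs per parameter per token."""
    return 6.0 * num_params * num_tokens


def accelerator_hours(total_flops: float, peak_flops: float, utilization: float) -> float:
    """Device-hours needed at a sustained fraction of peak throughput."""
    sustained = peak_flops * utilization  # FLOP/s actually achieved per device
    return total_flops / sustained / 3600.0


# All inputs below are hypothetical.
N = 70e9        # model parameters
D = 1.4e12      # training tokens
PEAK = 1.0e15   # per-accelerator peak FLOP/s
MFU = 0.40      # assumed model-FLOPs utilization
CLUSTER = 4096  # number of accelerators

C = training_flops(N, D)
hours = accelerator_hours(C, PEAK, MFU)
print(f"Total compute: {C:.2e} FLOPs")
print(f"Accelerator-hours: {hours:,.0f}")
print(f"Wall-clock on {CLUSTER} devices: {hours / CLUSTER / 24:.1f} days (ignoring scaling losses)")
```

These inputs give about 5.9 × 10^23 FLOPs, or roughly 408,000 accelerator-hours. At the assumed utilization, that is a little over four days on 4,096 devices. Multiplying accelerator-hours by an hourly price shows why training budgets favor buyers with deep pockets.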

Inference

- Needs: low latency, power efficiency, support for model-compression techniques (quantization, pruning), and deterministic performance at the edge or in production cloud services.

- Typical chips: NPUs, optimized inference GPUs, inference ASICs (Inferentia-like in the cloud, purpose-built ASICs at the edge), FPGAs, and mobile SoCs with dedicated accelerators.

- Economics: inference is enormous in aggregate, because every deployed model creates ongoing inference demand. It favors power-efficient designs and is more fragmented: many vendors can compete effectively if they hit power and latency targets. MarketsandMarkets and other firms project very large total addressable markets (TAMs) for inference, particularly as GenAI services move into production.
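
Quantization is one of the inference optimizations listed above. The sketch below is a minimal symmetric, per-tensor int8 scheme in plain Python, written for illustration only. Production toolchains typically use per-channel scales, calibration data and hardware-specific kernels, and the sample weights here are made up.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: scale = max|x| / 127, q = round(x / scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid division by zero for all-zero input
    q = [max(-127, min(127, round(v / scale))) for v in values]  # clamp to the int8 range
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate real values."""
    return [x * scale for x in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.55]  # hypothetical sample weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 codes:", q)
print(f"scale={scale:.5f}, max abs error={max_err:.5f} (bound: scale/2 = {scale / 2:.5f})")
print(f"storage: {len(weights) * 4} bytes as fp32 -> {len(q)} bytes as int8 (+ one scale)")
```

Each weight shrinks from 4 bytes (fp32) to 1 byte, and the rounding error per value stays within half the scale. That 4x reduction in memory footprint and traffic is one reason many inference accelerators support low-precision integer formats.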